DPS8M Performance

This post aims to show how the performance of systems implementing the 600/6000-series mainframe architecture evolved across hardware generations — and where the DPS8M Simulator fits in. It is not intended as an exhaustive reference for all models, instead focusing on the four generations of machines that ran the Multics operating system.

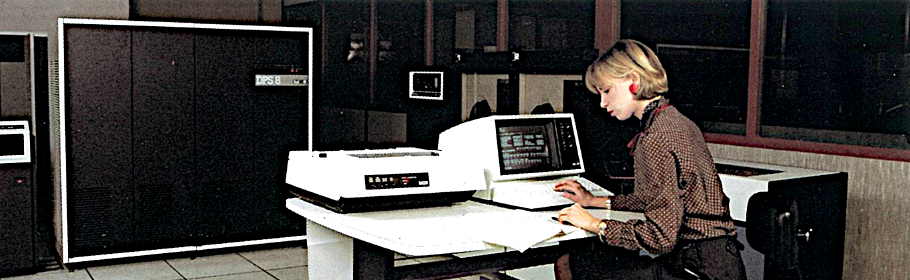

Although exact details are often unclear (and we don’t have original systems available to run new benchmarks), we can present the graphic above, representing the various systems that were used for running Multics, with a very high level of confidence.

Note there were hardware refreshes during the Level‑68 era (where the Multics offering was referred to first as the Series‑60/Level‑68, then as the Series‑60 Level‑68/DPS) that may have affected performance. We don’t have more specific data for these, unfortunately.

- If you aren’t familiar with the Family Tree of GE/Honeywell/Bull 600/6000-series systems, we encourage you to take a look.

The performance of the DPS‑8000, DPS‑88 (which introduced a five-stage pipelined CPU design), DPS‑90, DPS‑9000 (with a seven-stage pipeline), and later systems becomes harder to quantify, with significant variance and often conflicting performance claims between published benchmarks and marketing materials, although we believe the data presented here to be correct.

| System | Performance |
| --- | --- |
| GE‑645 | 0.435 MIPS |
| H6180 | 0.87 MIPS |
| Level‑68 | 1.1 MIPS |
| DPS‑8 ∕ 70M | 1.8 MIPS |
| DPS‑8000 | 2.8 MIPS |
| DPS‑88 ∕ 861 | 5.4 MIPS |
| DPS‑90 ∕ 91 | 10.8 MIPS |
| DPS‑9000 ∕ 91 | 40 MIPS |
| DPS‑9000 ∕ 700 | 80 MIPS |
| DPS‑9000 ∕ 900 | 120 MIPS |

Because these later systems never ran Multics (as they lacked the required Multics-specific appending unit additions to the architecture), we lack definitive apples-to-apples benchmarks (e.g. instr_speed, compilation speeds, timing details for known tasks, load reduction after upgrades) that could be used to confirm the data.

The strategy for introducing new models also changed significantly between the 1960s-1970s and the 1980s-1990s. In the earlier decades, Honeywell/Bull typically launched a flagship design with peak performance, followed by detuned and cost-optimized sub-models (which we are not addressing in this post). By the mid-1980s and 1990s, however, the approach had (mostly) reversed, with new sub-models usually (but not always) introduced featuring incremental performance improvements over the predecessors.

As an example, the DPS‑8 line (represented by the flagship DPS‑8/70 and DPS‑8/70M models) was always a 1.8 MIPS design, with sub-models (e.g. DPS‑8/47, DPS‑8/52) detuned in various ways (e.g. disabled cache, slower clock rates), while the DPS‑88/861 was introduced at 5.4 MIPS, with later sub-models (e.g. DPS‑88/981) reaching 14.6 MIPS. (Data here is conflicting, with some sources listing the introductory model as the DPS‑88/81 at 7.2 MIPS.) The DPS‑9000/91 was introduced at 40 MIPS, with the later DPS‑9000/700 sub-model achieving 80 MIPS, and the high-end DPS‑9000/900 sub-model exceeding 120 MIPS. No public performance claims are known for the (emulation-based) NovaScale or later systems.
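To make the generational jumps easier to compare, here is a minimal Python sketch that turns the MIPS figures quoted in this post into relative speedups. The numbers are copied from the table; the function names (`speedup`, `step_speedups`) and the choice of the DPS‑8/70M as the default baseline are our own illustration, and the resulting ratios are derived values, not new measurements.

```python
# MIPS figures copied from this post; the ratios are derived, not measured.
# Model names use plain ASCII hyphens and slashes for easy typing.
MIPS = {
    "GE-645": 0.435,
    "H6180": 0.87,
    "Level-68": 1.1,
    "DPS-8/70M": 1.8,
    "DPS-8000": 2.8,
    "DPS-88/861": 5.4,
    "DPS-90/91": 10.8,
    "DPS-9000/91": 40.0,
    "DPS-9000/700": 80.0,
    "DPS-9000/900": 120.0,
}


def speedup(system: str, baseline: str = "DPS-8/70M") -> float:
    """Return how many times faster `system` is than `baseline`."""
    return MIPS[system] / MIPS[baseline]


def step_speedups() -> list[tuple[str, str, float]]:
    """Return (older, newer, ratio) for each adjacent pair in table order."""
    names = list(MIPS)
    return [(a, b, MIPS[b] / MIPS[a]) for a, b in zip(names, names[1:])]


if __name__ == "__main__":
    for name, mips in MIPS.items():
        print(f"{name:<14} {mips:>8.3f} MIPS  {speedup(name):7.2f}x DPS-8/70M")
    for older, newer, ratio in step_speedups():
        print(f"{older} -> {newer}: {ratio:.2f}x")
```

Because the underlying ratings are approximate (and in places conflicting, as noted above), treat the ratios as rough guides only.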

Furthermore, Honeywell/Bull did not publish official CPU MIPS ratings, appropriately advising that they presented a limited and often misleading measure of the performance of truly distributed architectures. Floating-point MFLOPS numbers are even harder to come by (although 17.5 MFLOPS was claimed in the 1988 press release announcing the first DPS‑9000 models).

To illustrate the point, a Multics DPS‑8 mainframe would have used as many as six 18‑bit DATANET‑355/6600-series minicomputers acting as front-end communications processors (FNPs). TCP/IP or Chaosnet processing was often further offloaded to LSI-11 minis. A fully-loaded CP‑6 mainframe system running on a DPS‑90 could utilize as many as twelve Level‑6 (DPS‑6) or DATANET‑8 front-ends (and large DPS‑6 configurations were considered capable mainframes in their own right). In addition, TCP/IP processing on a CP‑6 system as described would be offloaded to dedicated XPS 100 (68020) or DPX/2‑300 (68040) minicomputers.

The IOMs (Input/Output Multiplexers, referred to as IMXs on DPS‑8000 and later systems), Tape/Storage/Peripheral Controllers, and the components connected to them were also not “dumb” devices, but capable of doing considerable work without interrupting the main CPU. These systems could handle substantial I/O loads with little stress on the main processor.

MIPS ratings are further complicated as the DPS‑88 introduced vector processing support, and the Extended Instruction Set (EIS) of the 6000‑series included instructions for number formatting, indexing, base conversion, and string operations capable of reducing complex COBOL or PL/I statements to as little as a single main CPU instruction.

So, how does the DPS8M Simulator R3.1.0 rank, running on commonly available consumer hardware?

- Use DPS8M R3.1.0 or later. The latest versions include significant performance optimizations.

- For most Intel-based systems, GCC produces somewhat faster builds than Clang (and other compilers).

- For most ARM64-based systems, Clang produces somewhat faster builds than GCC.

- Use a 64‑bit host system unless you absolutely cannot.

- Use PGO (Profile Guided Optimization) builds whenever possible (see below).

- Follow the advice produced by the simulator’s SHOW HINTS command.

- Anything faster than a Raspberry Pi 3B is likely running at least as fast as a real DPS‑8/70M (with no need for special tuning).

- Most modern desktop computers provide an experience equivalent to what Multics would have offered on a DPS‑90-class system. Apple Silicon systems (e.g. M4) seem especially performant.

The DPS8M Simulator offers a range of build options (see the make options output for full details), with the NO_LOCKLESS and NO_LTO options warranting particular attention due to their possible performance implications.

By default, the simulator utilizes multiple threads to support multiprocessor configurations of up to six CPUs, with each simulated CPU executing in an independent thread. Even when simulating a single processor mainframe system, the CPU is executing in a separate thread from I/O.

Systems with a single host processor core or those lacking efficient hardware support for lock-free atomic operations (e.g., older processors or embedded systems) might achieve better performance by using the NO_LOCKLESS option, but will be restricted to simulating single-processor system configurations.
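As a rough illustration of the threading layout described above, here is a minimal Python sketch with one thread per simulated CPU and a separate I/O thread. It is not the simulator's code: the names (`cpu_worker`, `io_worker`, `run`), the instruction counts, and the queue-based hand-off are all hypothetical, and Python's `queue.Queue` does not model the lock-free atomic operations the default build relies on.

```python
import queue
import threading

NUM_CPUS = 2                 # hypothetical; the post says up to six are supported
INSTRUCTIONS_PER_CPU = 1000  # hypothetical workload size
IO_EVERY = 100               # hypothetical: one I/O request per 100 instructions


def cpu_worker(cpu_id, io_requests, executed):
    """Stand-in for one simulated CPU: 'executes' instructions and posts I/O."""
    count = 0
    for n in range(INSTRUCTIONS_PER_CPU):
        count += 1                        # pretend to execute one instruction
        if n % IO_EVERY == 0:
            io_requests.put((cpu_id, n))  # hand I/O off instead of doing it here
    executed[cpu_id] = count              # each thread writes only its own slot


def io_worker(io_requests, serviced):
    """Stand-in for the I/O thread: services requests until told to stop."""
    while True:
        item = io_requests.get()
        if item is None:                  # sentinel: no more work
            break
        serviced.append(item)


def run():
    """Start one thread per CPU plus one I/O thread, then wait for all of them."""
    io_requests = queue.Queue()
    executed = [0] * NUM_CPUS
    serviced = []
    io_thread = threading.Thread(target=io_worker, args=(io_requests, serviced))
    cpus = [
        threading.Thread(target=cpu_worker, args=(i, io_requests, executed))
        for i in range(NUM_CPUS)
    ]
    io_thread.start()
    for t in cpus:
        t.start()
    for t in cpus:
        t.join()
    io_requests.put(None)                 # all CPUs are done; stop the I/O thread
    io_thread.join()
    return executed, serviced


if __name__ == "__main__":
    executed, serviced = run()
    print(executed, len(serviced))
```

The point of the sketch is only the shape: the CPU threads never wait for I/O to complete, which is why a host with a single core, or without efficient atomics, gains little from this layout.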

Also, by default, the simulator is built using Link Time Optimization (LTO). This is almost always desirable. If you must disable LTO (e.g. on a host with very little memory), you can use the NO_LTO option, but expect a 15% – 25% runtime performance penalty.

DPS8M R3.1.0 (and later) supports Profile Guided Optimization (or PGO) builds. Scripts are included in the src/pgo directory of the source kits to automate the process.

We highly recommend PGO builds if your system is supported. PGO build performance varies (depending on the operating system, compiler, and processor), but improvements of 30% or more are not unusual.

- Our official packages and binaries for AIX on POWER8+ and Linux on x86_64 systems are built with PGO.

- The Homebrew “bottles” (for macOS/ARM64, macOS/x86_64, and Linux/x86_64) are also PGO-enabled.
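The rough percentages quoted earlier for LTO and PGO can be turned into a back-of-the-envelope estimate. The Python sketch below assumes that the NO_LTO penalty is a reduction in throughput (one possible reading of the figure) and uses the 30% PGO figure as a typical gain; the function names and the 1.0 MIPS starting point are hypothetical, and real results vary by host, compiler, and workload.

```python
# Rough percentages quoted in this post; real results vary by host and compiler.
NO_LTO_PENALTY = (0.15, 0.25)  # "15% - 25% runtime performance penalty"
PGO_GAIN = 0.30                # "improvements of 30% or more are not unusual"


def without_lto(default_mips: float) -> tuple[float, float]:
    """Estimated (worst, best) MIPS for a NO_LTO build.

    Assumes the penalty is a reduction in throughput relative to the
    default (LTO-enabled) build.
    """
    low, high = NO_LTO_PENALTY
    return default_mips * (1 - high), default_mips * (1 - low)


def with_pgo(default_mips: float) -> float:
    """Estimated MIPS for a PGO build, treating 30% as a typical gain."""
    return default_mips * (1 + PGO_GAIN)


if __name__ == "__main__":
    default = 1.0  # hypothetical measurement for a default (LTO, non-PGO) build
    worst, best = without_lto(default)
    print(f"NO_LTO: {worst:.2f} to {best:.2f} MIPS")
    print(f"PGO:    {with_pgo(default):.2f} MIPS or more")
```

An estimate like this is no substitute for benchmarking your own build, but it shows why the default LTO build, and a PGO build where supported, are worth the extra compile time.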

PGO builds are currently supported on IBM AIX 7.2 or later with at least Clang 19 or IBM Open XL C/C++ V17.1, Apple macOS 14 (Sonoma) or later with at least Homebrew’s GCC 10 or Clang 19 and LLD 19, and on most other Unix-like (e.g. Linux, FreeBSD, NetBSD, Haiku) systems using at least GCC 10 or Clang 19 (with GCC 12+ and Clang 20+ recommended). Slightly older versions may be sufficient. Experimental PGO support exists for МЦСТ Эльбрус (Elbrus) Linux systems using LCC v1.26 or later.

If you don’t see your preferred operating system and compiler above, please contact us.

If you’ve made it this far, you probably want to know how the simulator compares to the historic systems. Read on for a detailed comparison of the simulator running on some popular systems vs. the real hardware.

The Qualcomm QCA9531 is a MIPS-based embedded design that can be found in various consumer and industrial embedded modules and routers.

This platform is poorly suited to running the simulator.

Most systems using this SoC have a very small amount of memory, usually 128MB or less. The CPU is an anemic single-core 32‑bit 650MHz MIPS32 74Kc CPU that is both lacking an FPU (requiring a toolchain built with floating-point emulation) and without hardware support for important lock-free atomic operations such as CAS (compare‑and‑swap). This results in extremely poor floating-point performance, and a large performance penalty if using a threaded simulator build.

On a positive note, systems using the QCA9531 do not require passive or active cooling, use very little power at full load (approximately 2W), and include a decent complement of I/O and networking — USB, SPI, GPIO, serial UART, 802.11bgn, 10/100 Ethernet, etc.

However, with the right build options, even this low-end embedded board can run Multics at “native” speeds (at least for integer-based workloads).

In the following graphs, green bars represent historic hardware and blue bars represent the DPS8M Simulator.

- The actual device tested was a GL.iNet AR750S‑Ext router, available used or refurbished for under $25 USD. It comes equipped with 128MB of RAM and 128MB flash. The factory software is a customized OpenWrt-based Linux distribution. We replaced this with a fresh OpenWrt image built from source.

- Due to the lack of memory, you’ll be unable to compile the simulator on the device itself, unless you use the NO_LTO build option. This is likely the case even with zram configured. PGO builds, while possible, can take hours to complete.

- We’ve included benchmarks for PGO and standard builds, both with and without the NO_LTO and/or NO_LOCKLESS (shown as NL) build options, so you can see how important these are for performance on this type of system. GCC was used for all compilations.

- We highly recommend that instead of building software directly on a device like this, you use a toolchain hosted on another system and cross-compile. QEMU can be used for generating the PGO profile data. If you need help with this, let us know! It’s worthwhile: as you can see, the NO_LOCKLESS build with PGO and LTO enabled benchmarks about 50% faster than without LTO on this platform.

The Raspberry Pi 3B in 32‑bit mode is about the slowest system that we could recommend for serious use, capable of running Multics at a level of performance that is a bit faster than a Honeywell 6180 and a bit slower than a Level‑68. The Raspberry Pi 3B in 32‑bit mode uses the ARMv7‑HF instruction set.

- The 32‑bit versions of Raspberry Pi OS are optimized generically for the ARMv6 ISA (which can run on any Raspberry Pi system), whereas the processor in the Pi 3B, running in 32‑bit mode, supports the newer ARMv7‑HF ISA.

- Ensuring that DPS8M is built specifically for the ARMv7‑HF ISA (and not ARMv6) results in a measurable performance improvement.

- Only a Clang-based PGO build was tested. Attempting to build GCC-based PGO binaries resulted in linking errors that could not be easily resolved (and that cannot be reproduced on the 64‑bit Raspberry Pi 3B and later systems).

2025‑06‑04 Update: A GCC-based PGO build, produced using a crosstool‑ng-generated GCC 14.3‑STABLE ARMv7‑HF toolchain, achieved 1.00 MIPS—approximately a 2% improvement over the Clang-based PGO build.

The Raspberry Pi 3B (64‑bit) is a nice step up from the Raspberry Pi 3B in 32‑bit mode, capable of running Multics at a level of performance nearly (87%) equivalent to a DPS‑8/70M — and 60% faster than the same system in 32‑bit mode. The Raspberry Pi 3B uses the BCM2837 (ARM Cortex‑A53) SoC running at 1200MHz implementing the ARMv8‑A instruction set.

- As expected for ARM64-based systems, simulator builds using Clang outperformed builds using GCC.

- PGO improved performance by 35% for GCC builds and 45% for Clang builds.
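The percentages quoted for the Raspberry Pi 3B can be cross-checked against the MIPS table with a little arithmetic. The Python sketch below derives approximate MIPS values from the 87% and 60% figures; the function name `pi3b_estimates` is our own, and the outputs are estimates implied by the rounded percentages in this post, not benchmark results.

```python
DPS8_70M_MIPS = 1.8      # from the table in this post
H6180_MIPS = 0.87        # from the table in this post
LEVEL_68_MIPS = 1.1      # from the table in this post

FRACTION_OF_DPS8 = 0.87  # 64-bit: "nearly (87%) equivalent to a DPS-8/70M"
GAIN_OVER_32BIT = 0.60   # 64-bit is "60% faster" than the same system in 32-bit mode


def pi3b_estimates() -> tuple[float, float]:
    """Return estimated (64-bit, 32-bit) MIPS implied by the quoted percentages."""
    pi64 = DPS8_70M_MIPS * FRACTION_OF_DPS8
    pi32 = pi64 / (1 + GAIN_OVER_32BIT)
    return pi64, pi32


if __name__ == "__main__":
    pi64, pi32 = pi3b_estimates()
    print(f"Pi 3B (64-bit): about {pi64:.2f} MIPS")
    print(f"Pi 3B (32-bit): about {pi32:.2f} MIPS")
    # The 32-bit estimate should land between the H6180 and the Level-68.
    print(H6180_MIPS < pi32 < LEVEL_68_MIPS)
```

The implied 32‑bit figure of just under 1 MIPS sits between the H6180 and the Level‑68, which agrees with the description of the 32‑bit results given earlier.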

Finally, here are some results for modest x86_64-based systems, all of which managed to best the final Multics-capable platform, the DPS‑8/70M. These tests were performed on all systems using our official release build for Linux, without additional configuration or tuning.

- Even the lowest-end system, an AMD GX‑412TC-based embedded board, can run Multics faster than the DPS‑8/70M.

- Almost any modern machine will run the simulator with an acceptable level of performance.

- Even very low-end embedded devices are able to offer an experience equal to the historic platform.

- Amusingly, the 6.36 simulator that preceded the GE‑645 and was used for early Multics development was so named because it was assumed to be about 100× slower than the finished “GE‑636” (the working name for the GE‑645). From that we can estimate that it executed approximately 4,350 instructions per second on a GE‑635, or 0.00435 MIPS.

We’ve come a long way since then!

Don’t hesitate to get in touch if you have any questions.